Robust Autonomy in Complex Environments

We design perception, navigation and decision-making algorithms that help mobile robots achieve robust autonomy in complex physical environments. Specific goals of our research include improving the reliability of autonomous navigation for unmanned underwater, surface, ground and aerial vehicles subjected to noise-corrupted and drifting sensors, bandwidth-limited communications, incomplete knowledge of the environment, and tasks that require interaction with surrounding objects and structures. Our recent work uses artificial intelligence to improve the situational awareness of mobile robots operating in degraded conditions, and to enable intelligent robot decision-making under uncertainty.

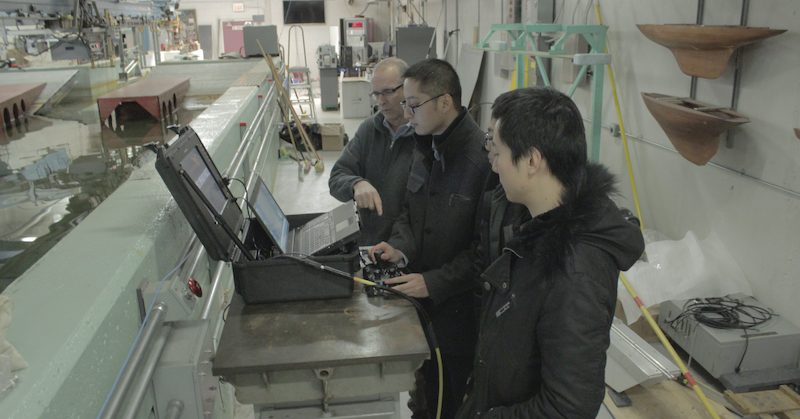

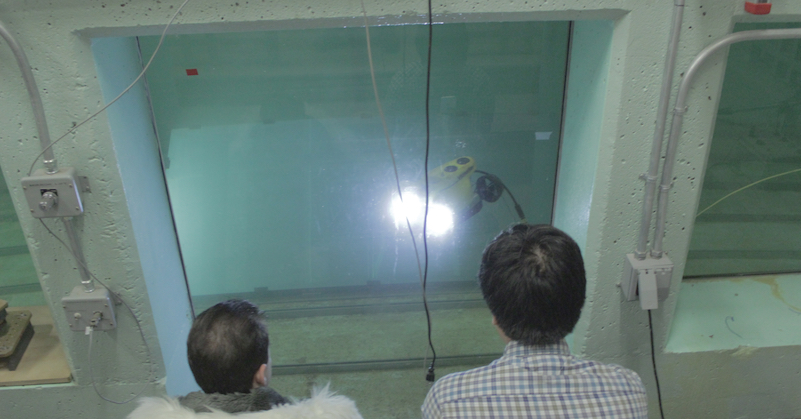

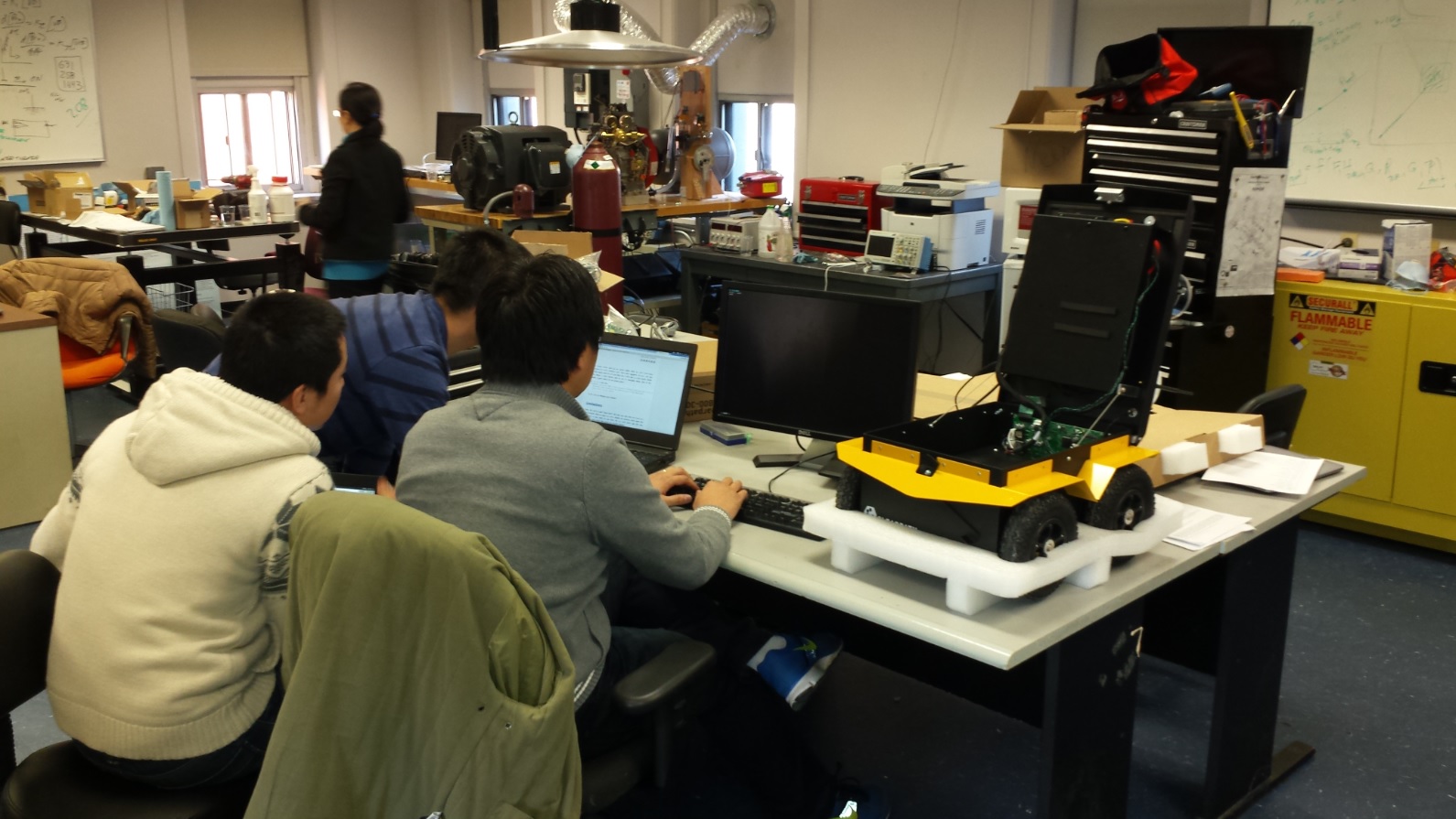

Top: Testing an ROV in Stevens' Davidson Laboratory tank; testing a custom-built mobile manipulator in our lab.

Center: Acoustically mapping the pilings of Stevens' Hudson River pier with our custom-built BlueROV.

Bottom: Bench-testing our Clearpath Jackal unmanned ground vehicle after a field experiment in Hoboken's Pier A Park.

Recent News:

IROS 2025 Field Robotics Keynote Talk

Lab director Prof. Brendan Englot was invited to give a Keynote Talk in the Field Robotics Session of IROS 2025 in Hangzhou, China. The talk provides an overview of the lab's recent research in marine robotics, including many of the papers highlighted below.

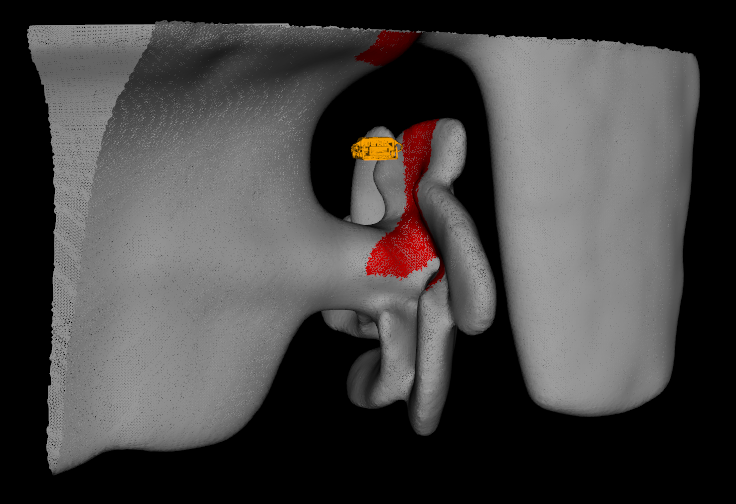

IROS 2025 Papers on Robot Perception

We are excited to present three new papers at IROS 2025 addressing problems in robot perception. The first paper, "Opti-Acoustic Scene Reconstruction in Highly Turbid Underwater Environments," achieves real-time 3D reconstruction of complex underwater scenes. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment (shown above). This research was led by Ivana Collado-Gonzalez.

The second paper, "DRACo-SLAM2: Distributed Robust Acoustic Communication-efficient SLAM for Imaging Sonar Equipped Underwater Robot Teams with Object Graph Matching," improves upon our original DRACo-SLAM framework presented at IROS 2022. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment. This research was led by Yewei Huang.

The third paper, "CVD-SfM: A Cross-View Deep Front-end Structure-from-Motion System for Sparse Localization in Multi-Altitude Scenes," proposes a Structure-from-Motion framework intended for robot imagery collected at different altitudes. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment. This research was led by Yaxuan Li.

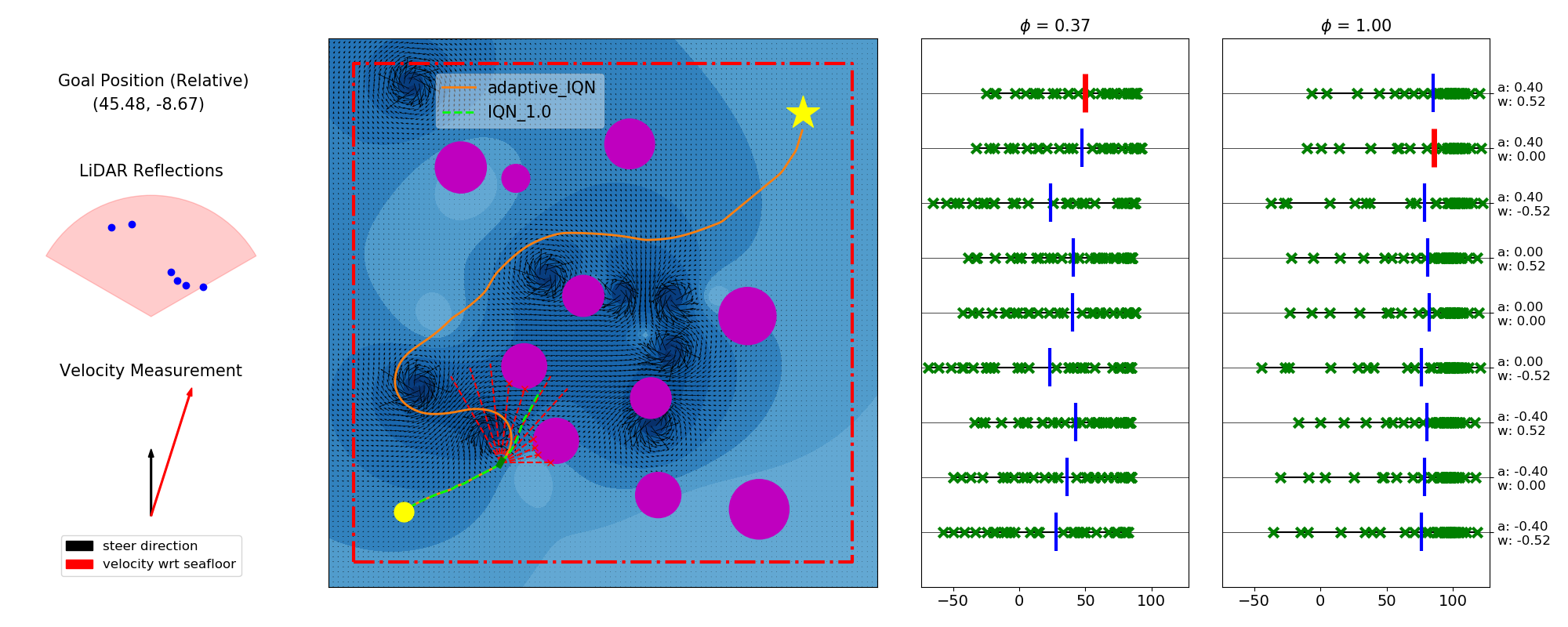

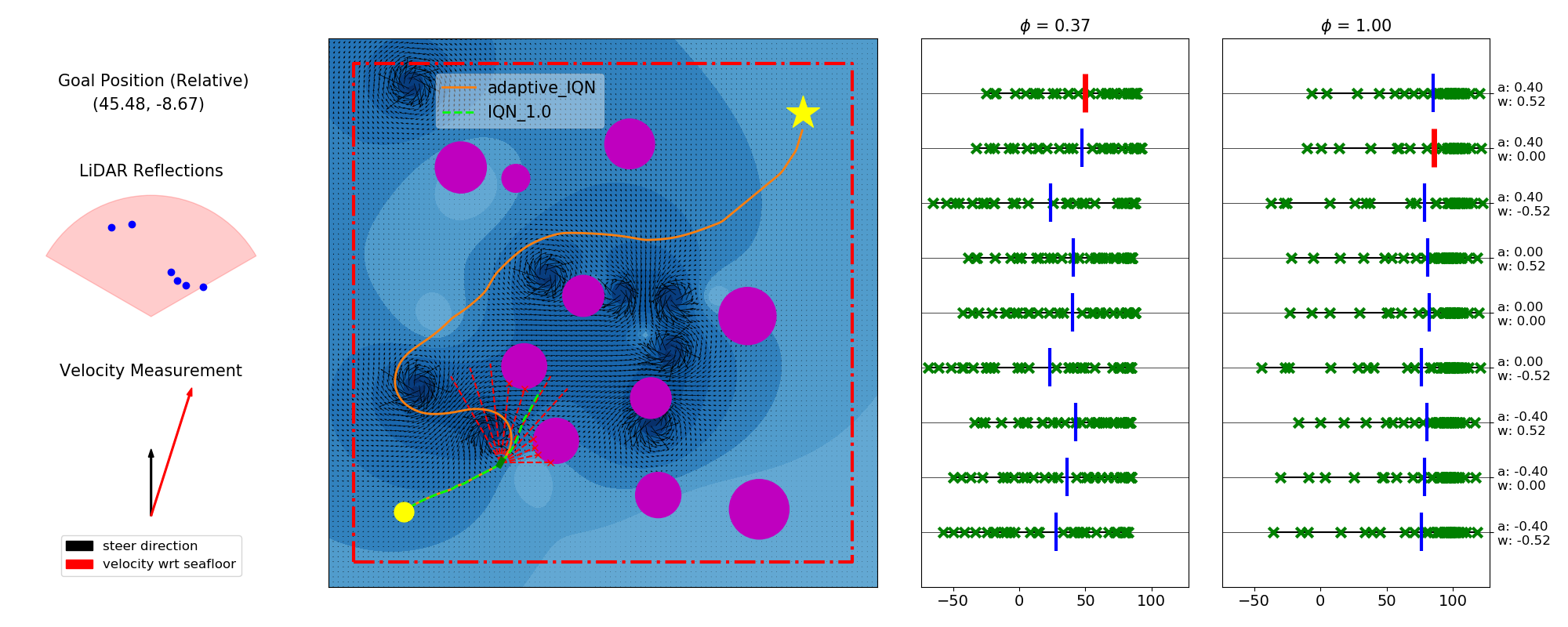

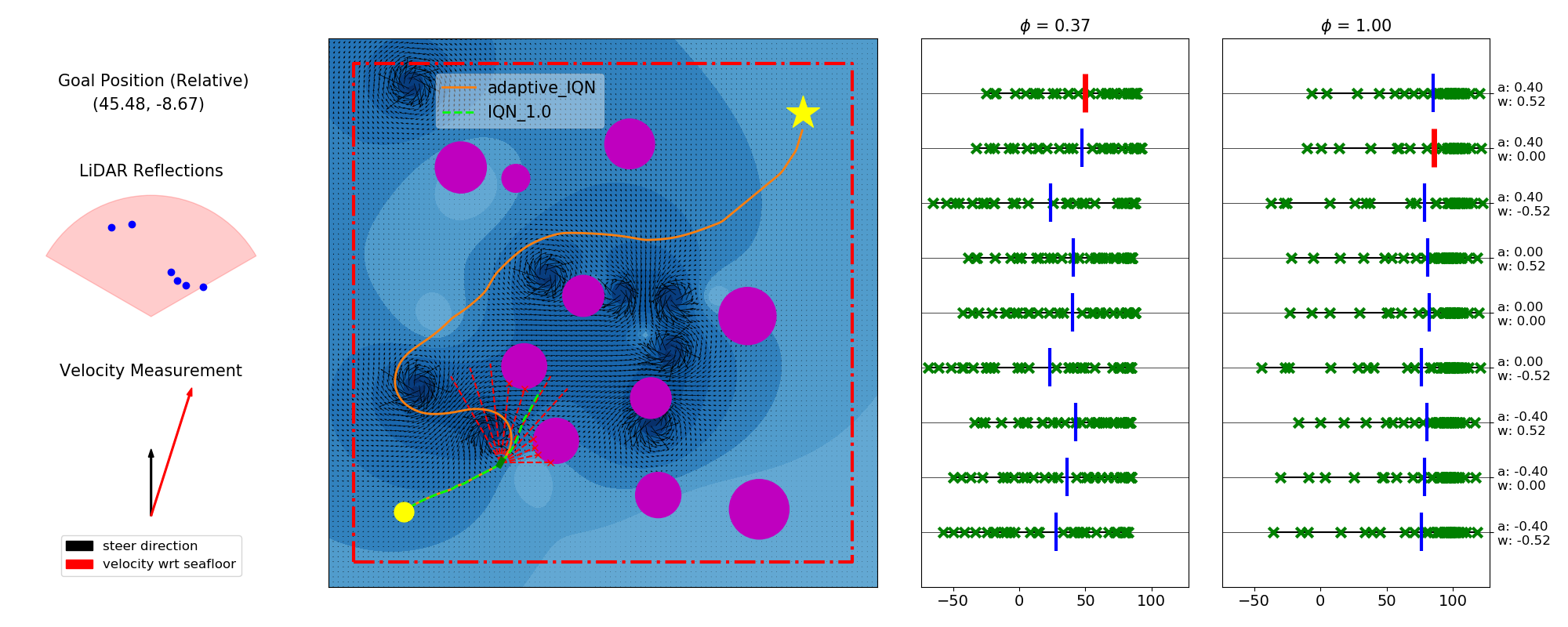

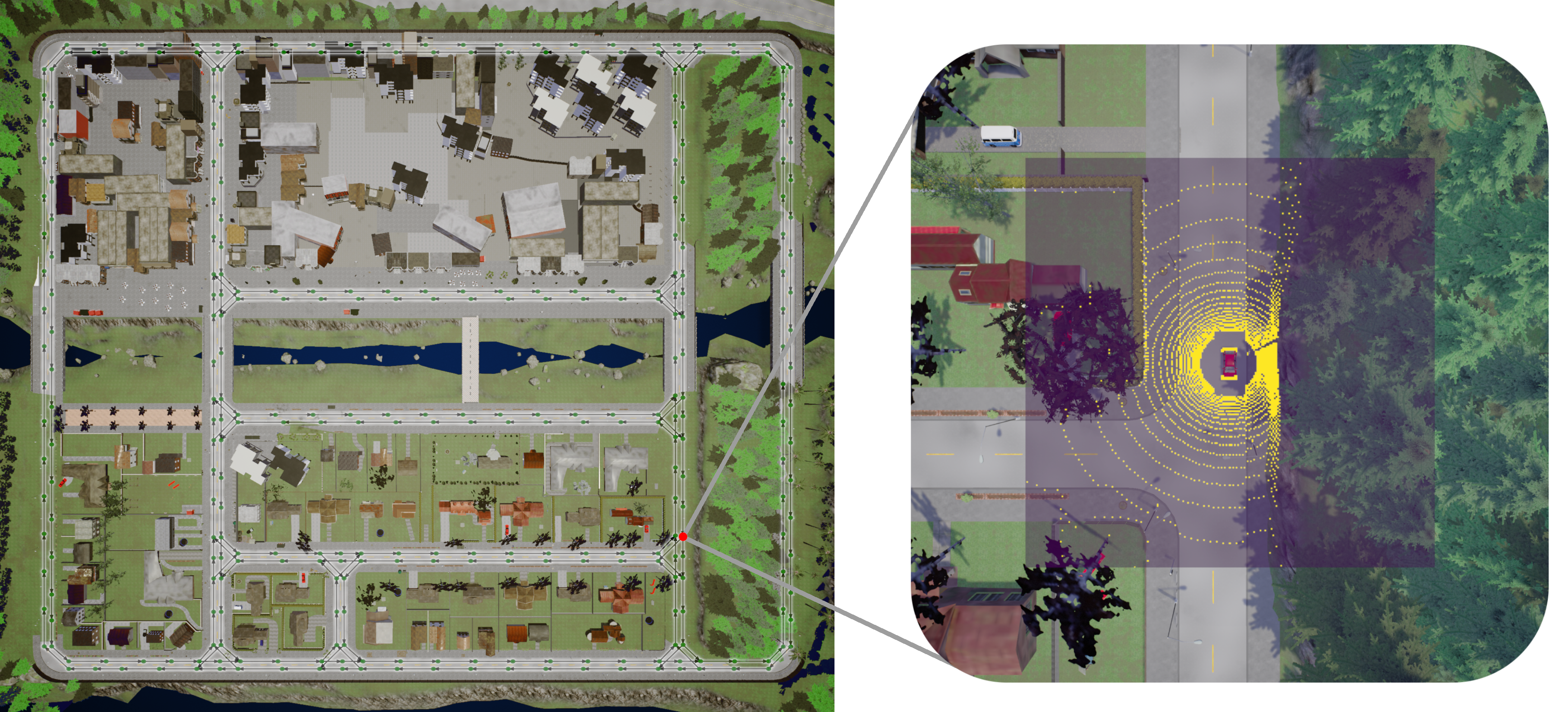

Low-Fidelity-Sim 2 High-Fidelity-Sim: Autonomous Navigation in Congested Maritime Environments via Distributional Reinforcement Learning

We are pleased to announce that our paper "Distributional Reinforcement Learning based Integrated Decision Making and Control for Autonomous Surface Vehicles" will appear in the February 2025 issue of IEEE Robotics and Automation Letters, and it is available on IEEExplore. A preprint of our paper is available on arXiv, and our code is available on GitHub. This work was led by Xi Lin.

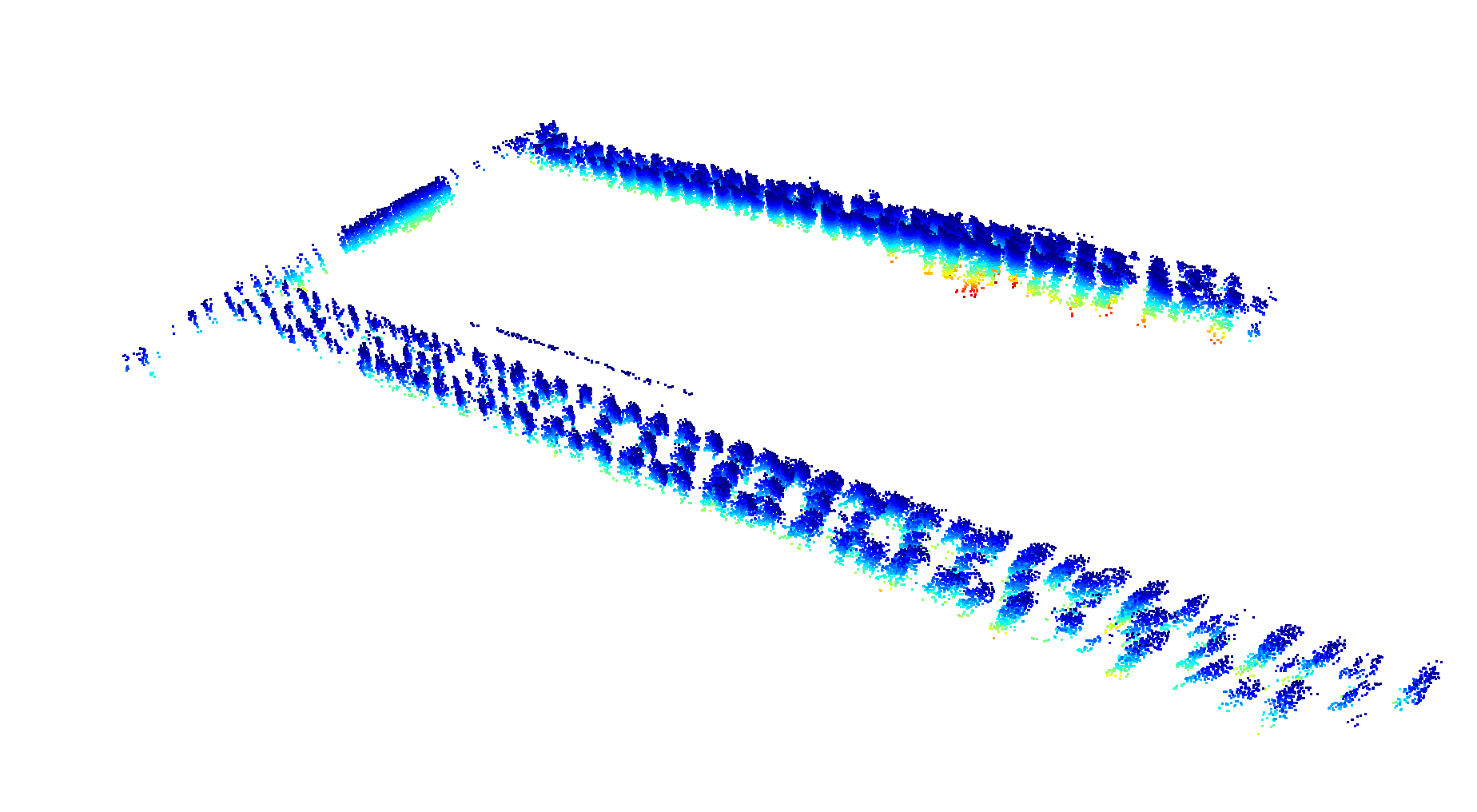

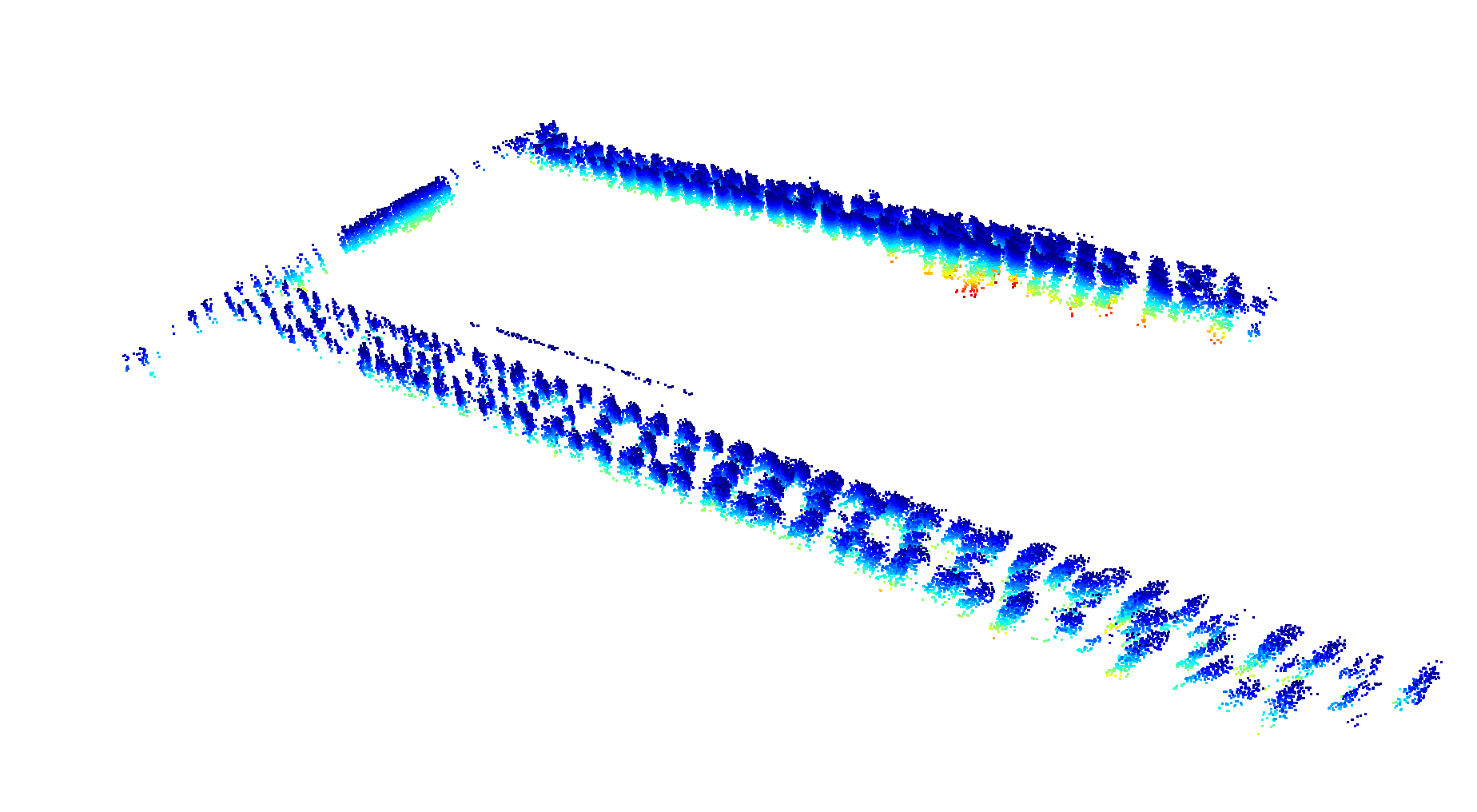

Large-Scale Underwater 3D Mapping with a Stereo Pair of Imaging Sonars

We are excited to announce that a paper telling the complete story of our lab's work with dense 3D underwater mapping using a stereo pair of (orthogonally oriented) imaging sonars has been published in the IEEE Journal of Oceanic Engineering (a preprint of the paper is available on arXiv). This work, led by John McConnell, was demonstrated via field experiments conducted at Joint Expeditionary Base Little Creek in VA (pictured above, alongside a sonar-derived 3D map of the structures visible in the photo), Penn's Landing in Philadelphia, PA, and SUNY Maritime College in Bronx, NY, and builds on our earlier papers published at IROS 2020 (McConnell, Martin and Englot) and ICRA 2021 (McConnell and Englot).

The custom-instrumented BlueROV underwater robot used in this work, and its mapping capabilities, were recently highlighted in both a video filmed by ASME and a Stevens news article.

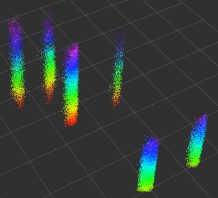

Mobile Manipulation for Inspecting Electric Substations

We are pleased to announce that our paper "Robust Autonomous Mobile Manipulation for Substation Inspection" has been published in the ASME Journal of Mechanisms and Robotics, in its special issue on Selected Papers from IDETC-CIE. The paper can be accessed in ASME's Digital Collection, and more details are illustrated in the accompanying video attachment (shown above). This research was led by Erik Pearson.

ICRA 2024 Papers on Autonomous Navigation under Uncertainty

We recently presented three papers at ICRA 2024 addressing autonomous navigation under different types of uncertainty. The first paper, "Decentralized Multi-Robot Navigation for Autonomous Surface Vehicles with Distributional Reinforcement Learning," addresses ASV navigation in congested and disturbance-filled environments. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment (shown above). This research was led by Xi Lin.

The second paper, "Multi-Robot Autonomous Exploration and Mapping Under Localization Uncertainty with Expectation-Maximization," uses virtual maps to support high-performance multi-robot exploration of unknown environments. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment. This research was led by Yewei Huang.

The third paper, "Real-Time Planning Under Uncertainty for AUVs Using Virtual Maps," also uses virtual maps, as a tool to support planning under localization uncertainty across long distances underwater. A preprint of the paper is available on arXiv, and more details are illustrated in the accompanying video attachment. This research was led by Ivana Collado-Gonzalez.

New Papers and Code on Distributional Reinforcement Learning

We have released two new code repositories with tools that have supported our research on Distributional Reinforcement Learning.

Our paper on Robust Unmanned Surface Vehicle (USV) Navigation with Distributional RL will be appearing at IROS 2023 in October. A preprint of the paper is available on arXiv, the corresponding code is available on GitHub, and more details are illustrated in the accompanying video attachment. This research was led by Xi Lin.

A second paper on Robust Route Planning with Distributional RL in a Stochastic Road Network Environment appeared earlier this summer at Ubiquitous Robots 2023. A preprint of the paper is available on arXiv, and the accompanying code, which provides Stochastic Road Networks derived from maps in the CARLA Simulator, is available on GitHub. This research was led by Xi Lin, Paul Szenher, and John D. Martin.

Underwater Robotics Research Highlighted by The American Society of Mechanical Engineers (ASME)

Our underwater robotics research was recently highlighted in ASME's new series on "What's in your lab?". ASME filmed a 3D sonar mapping experiment performed with one of our customized BlueROV robots, which was led by John McConnell and Ivana Collado-Gonzalez. The experiment is part of an effort to enhance the capabilities described in an earlier paper published at IROS (McConnell, Martin and Englot, IROS 2020), and will be documented in a new paper that is currently in preparation.

Active Perception with the BlueROV Underwater Robot

We are excited to share some news about our "most autonomous" robot deployed in the field to date, which used sonar-based active SLAM to autonomously explore and map an obstacle-filled harbor environment with high accuracy. To achieve this, we adapted our algorithms for Expectation-Maximization based autonomous mobile robot exploration published at ISRR (Wang and Englot, ISRR 2017) and IROS (Wang, Shan and Englot, IROS 2019) to run on our BlueROV underwater robot, which uses its imaging sonar for SLAM. This work was performed by Jinkun Wang with the help of Fanfei Chen, Yewei Huang, John McConnell, and Tixiao Shan, and it was recently published in the October 2022 issue of the IEEE Journal of Oceanic Engineering. A preprint of our paper can be found on arXiv, and a recent seminar discussing our work on this topic can be viewed here. Our BlueROV SLAM code used to support this work, along with sample data, is available on GitHub.

Introducing DRACo-SLAM: Distributed, Multi-Robot Sonar-based SLAM Intended for use with Wireless Acoustic Comms

We are excited to announce that our recent work on DRACo-SLAM (Distributed Robust Acoustic Communication-efficient SLAM for Imaging Sonar Equipped Underwater Robot Teams) will be presented at IROS 2022. A preprint of our paper is available on arXiv, and the DRACo-SLAM library is available on GitHub. This work was led by John McConnell.

Using Overhead Imagery of Ports and Harbors to Aid Underwater Sonar-based SLAM

We are excited to announce that our recent work proposing Overhead Image Factors for underwater sonar-based SLAM has been accepted for publication in IEEE Robotics and Automation Letters, and for presentation at ICRA 2022. Our paper is available on IEEExplore.

This work uses deep learning to predict the above-surface appearance of underwater objects observed by sonar, which is registered against the contents of overhead imagery to provide an absolute position reference for underwater robots operating in coastal areas. This research was led by John McConnell.
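The registration step can be illustrated with a much simpler stand-in. The sketch below is our own hypothetical example, not the Overhead Image Factor method from the paper: it assumes the predicted above-surface image and the overhead image already share resolution and orientation, and recovers only an integer pixel translation using FFT cross-correlation. The function name `estimate_shift` is ours.

```python
import numpy as np


def estimate_shift(predicted, overhead):
    """Integer (row, col) shift that best aligns `predicted` with `overhead`.

    Hypothetical helper. Uses circular FFT cross-correlation, so both images
    must share a shape and the shift is only recoverable modulo the image size.
    """
    a = np.asarray(predicted, dtype=float)
    b = np.asarray(overhead, dtype=float)
    # Remove the means so large uniform regions do not dominate the correlation.
    a = a - a.mean()
    b = b - b.mean()
    # The correlation peaks at the offset s where b(x) is closest to a(x - s).
    corr = np.fft.ifft2(np.fft.fft2(b) * np.conj(np.fft.fft2(a))).real
    r, c = np.unravel_index(np.argmax(corr), corr.shape)
    rows, cols = corr.shape
    # Map offsets past the halfway point to negative shifts.
    if r > rows // 2:
        r -= rows
    if c > cols // 2:
        c -= cols
    return int(r), int(c)
```

For example, if a synthetic "predicted" image containing two rectangles is shifted with `np.roll(pred, shift=(5, -7), axis=(0, 1))`, `estimate_shift(pred, shifted)` recovers `(5, -7)`. A real system would also need to handle rotation, scale, and the uncertainty of the match, which is why a probabilistic factor in a SLAM back end is a more natural home for this kind of measurement.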

Introducing DiSCo-SLAM: A Distributed, Multi-Robot LiDAR SLAM Code/Data Release

We are excited to announce that our recent work on DiSCo-SLAM (Distributed Scan Context-Enabled Multi-Robot LiDAR SLAM with Two-Stage Global-Local Graph Optimization) has been accepted for publication in IEEE Robotics and Automation Letters, and for presentation at ICRA 2022. Our paper is available on IEEExplore.

The DiSCo-SLAM library and two new multi-robot SLAM datasets intended for use with it are available on GitHub. This work, which was also featured recently on the Clearpath Robotics Blog, was led by Yewei Huang.

Zero-Shot Reinforcement Learning on Graphs for Autonomous Exploration

We are excited that our paper "Zero-Shot Reinforcement Learning on Graphs for Autonomous Exploration Under Uncertainty" has been accepted for presentation at ICRA 2021. In the video above, which assumes that a lidar-equipped mobile robot relies on segmentation-based SLAM for localization, we show the exploration policy learned by training in a single Gazebo environment, and its successful transfer both to other virtual environments and to robot hardware. A preprint of the paper is available on arXiv, and our presentation of the paper can be viewed here. This work was led by Fanfei Chen.

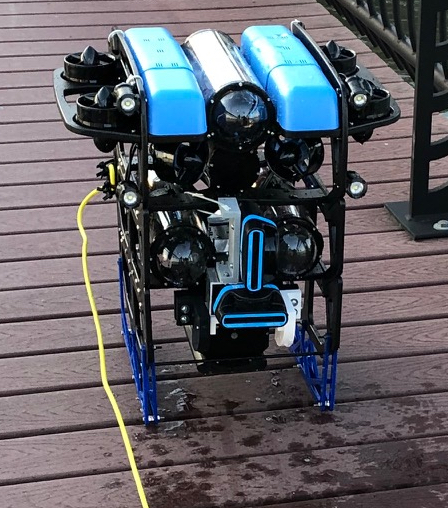

Predictive Large-Scale 3D Underwater Mapping with Sonar

We are pleased to announce that our paper on predictive large-scale 3D underwater mapping using a pair of wide-aperture imaging sonars has been accepted for presentation at ICRA 2021. This work features our custom-built heavy configuration BlueROV underwater robot, which is equipped with two orthogonally oriented Oculus multibeam sonars (the software packages for our BlueROV can be found on GitHub). A preprint of the paper is available on arXiv, and our presentation of the paper can be viewed here. This work was led by John McConnell.

Lidar-Visual-Inertial Navigation, and Imaging Lidar Place Recognition

Two collaborative works with MIT, led by lab alumnus Dr. Tixiao Shan and featuring data gathered with our Jackal UGV, will be appearing at ICRA 2021. The first, shown above, is LVI-SAM, a new framework for lidar-visual-inertial navigation. A preprint of the paper is available on arXiv, a presentation of the paper can be viewed here, and the LVI-SAM library is available on GitHub.

The second work, shown above, proposes a new framework for place recognition using imaging lidar, which is implemented using the Ouster OS1-128 lidar, operated in both a hand-held mode and aboard Stevens' Jackal UGV. A preprint of the paper is available on arXiv, a presentation of the paper can be viewed here, and we encourage you to download the library from GitHub.

Lidar Super-resolution Paper and Code Release

We have developed a framework for lidar super-resolution that is trained completely using synthetic data from the CARLA Urban Driving Simulator. It is capable of accurately enhancing the apparent resolution of a physical lidar across a wide variety of real-world environments. Our paper on this work was recently published in Robotics and Autonomous Systems, and we encourage you to download our library from GitHub. The author and maintainer of this library is Tixiao Shan.

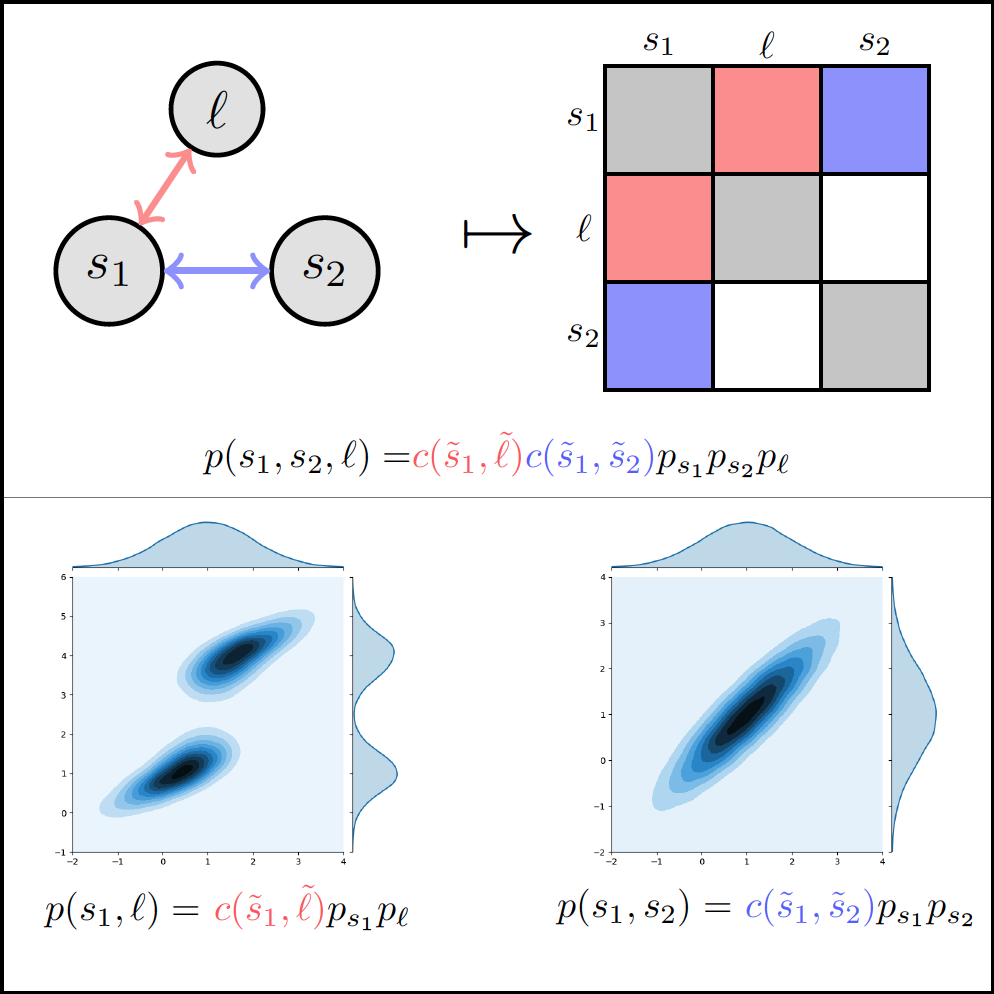

Copula Models for Capturing Probabilistic Dependencies in SLAM

We are happy to announce that our paper on using copulas for modeling the probabilistic dependencies in simultaneous localization and mapping (SLAM) with landmarks has been accepted for presentation at IROS 2020. A preprint of the paper "Variational Filtering with Copula Models for SLAM" is available on arXiv and our presentation of the paper can be viewed here. This collaborative work with MIT was led jointly by John Martin and lab alumnus Kevin Doherty.
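For readers new to copulas, the usual starting point is Sklar's theorem, which splits a joint distribution into its one-dimensional marginals \(F_i\) and a copula \(C\) that carries all of the dependence between variables. The block below states it in general notation of our own choosing (not the paper's); the Gaussian copula density on the last line, with correlation matrix \(P\) and standard normal CDF \(\Phi\), is only one common choice of \(C\).

```latex
\begin{aligned}
F(x_1,\dots,x_d) &= C\big(F_1(x_1),\dots,F_d(x_d)\big) \\
f(x_1,\dots,x_d) &= c\big(F_1(x_1),\dots,F_d(x_d)\big)\prod_{i=1}^{d} f_i(x_i),
\qquad c = \frac{\partial^d C}{\partial u_1 \cdots \partial u_d} \\
c_{P}(u_1,\dots,u_d) &= \lvert P\rvert^{-1/2}
\exp\!\Big(-\tfrac{1}{2}\,\mathbf{z}^{\top}\big(P^{-1}-I\big)\mathbf{z}\Big),
\qquad z_i = \Phi^{-1}(u_i)
\end{aligned}
```

The appeal for SLAM is that each marginal (for example, the distribution of a single landmark coordinate) can keep its own shape, while the copula models how the variables co-vary.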

Autonomous Exploration using Deep Reinforcement Learning on Graphs

We are pleased to announce that our paper "Autonomous Exploration Under Uncertainty via Deep Reinforcement Learning on Graphs" has been accepted for presentation at IROS 2020. In the video above, which assumes a range-sensing mobile robot depends on the observation of point landmarks for localization, we show the performance of several competing architectures that combine deep RL with graph neural networks to learn how to efficiently explore unknown environments, while building accurate maps. A preprint of the paper is available on arXiv, our presentation of the paper can be viewed here, and we encourage you to download our code from GitHub. This work was led by Fanfei Chen, who is the author and maintainer of the "DRL Graph Exploration" library.

Dense Underwater 3D Reconstruction with a Pair of Wide-aperture Imaging Sonars

We are pleased to announce that our paper on dense underwater 3D reconstruction using a pair of wide-aperture imaging sonars has been accepted for presentation at IROS 2020. This work features our custom-built heavy configuration BlueROV underwater robot, which is equipped with two orthogonally oriented Oculus multibeam sonars (the software packages for our BlueROV can be found on GitHub). A preprint of the paper is available on arXiv, and our presentation of the paper can be viewed here. This work was led by John McConnell.

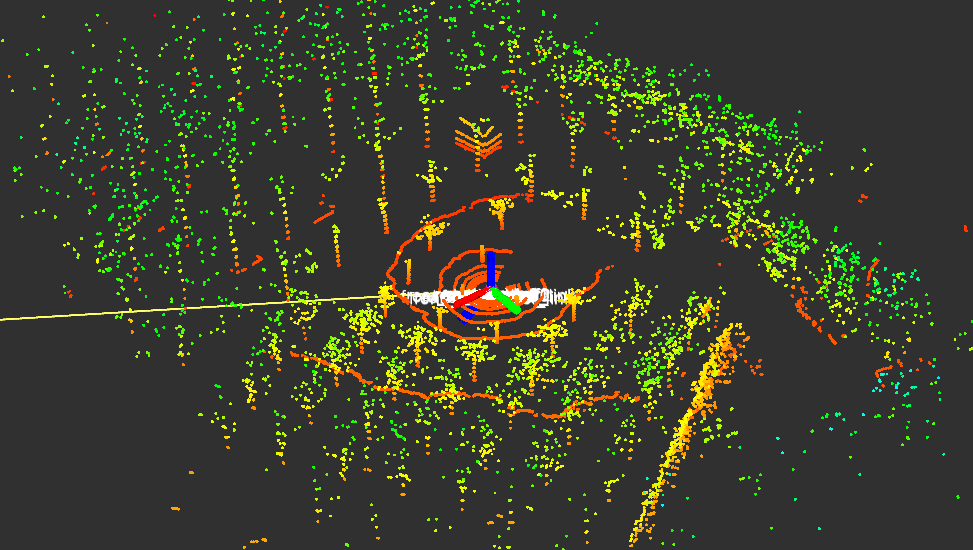

Lidar Inertial Odometry via Smoothing and Mapping (LIO-SAM)

We recently brought our Jackal UGV to a nearby park to perform some additional validation of LIO-SAM, a framework for tightly-coupled lidar inertial odometry which will be presented at IROS 2020. A preprint of the paper is available on arXiv, a presentation of the paper can be viewed here, and we encourage you to download the library from GitHub.

This collaborative work with MIT was led by lab alumnus Dr. Tixiao Shan, who is the author and maintainer of the LIO-SAM library.

We also recently mounted the new 128-beam Ouster OS1-128 lidar on our Jackal UGV, and performed some additional LIO-SAM mapping on the Stevens campus (all earlier results were gathered using the 16-beam Velodyne VLP-16). It was encouraging to see LIO-SAM support real-time operation despite the greatly increased sensor resolution.

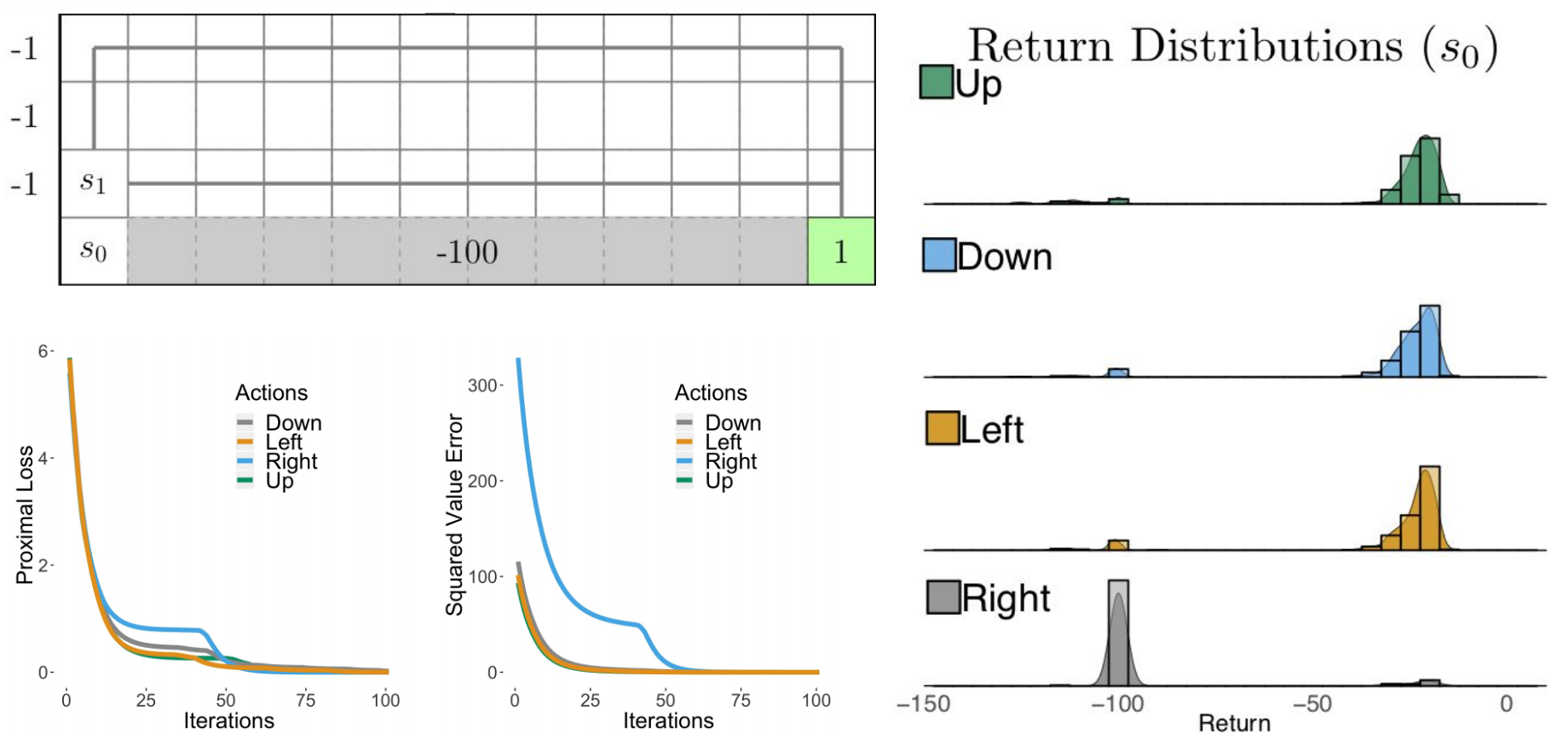

Stochastically Dominant Distributional Reinforcement Learning

We are happy to announce that our paper on risk-aware action selection in distributional reinforcement learning has been accepted for presentation at the 2020 International Conference on Machine Learning (ICML). A preprint of the paper "Stochastically Dominant Distributional Reinforcement Learning" is available on arXiv, and our presentation of the paper can be viewed here. This work was led by John Martin.
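To give a feel for what "stochastically dominant" means when comparing return distributions, here is a minimal sketch of our own, not the algorithm from the paper. It assumes each action's return distribution is represented by N equally weighted quantile estimates (as a quantile-based distributional critic might output) and checks second-order stochastic dominance: X dominates Y when the integrated quantile function of X is at least that of Y everywhere. With equal weights, checking the cumulative sums of the sorted values is sufficient. The function names are hypothetical.

```python
import numpy as np


def ssd_dominates(x, y, tol=1e-9):
    """True if quantile samples x second-order stochastically dominate y.

    Assumes x and y are equally weighted quantile estimates of the same
    length (e.g. the N outputs of a quantile-based distributional critic).
    """
    xs = np.sort(np.asarray(x, dtype=float))
    ys = np.sort(np.asarray(y, dtype=float))
    if xs.shape != ys.shape:
        raise ValueError("x and y must have the same number of quantiles")
    # The integrated quantile function at k/N is the sum of the k smallest
    # values divided by N; the shared 1/N factor cancels in the comparison.
    return bool(np.all(np.cumsum(xs) >= np.cumsum(ys) - tol))


def undominated_actions(quantiles_per_action):
    """Indices of actions that no other action strictly SSD-dominates."""
    n = len(quantiles_per_action)
    keep = []
    for a in range(n):
        qa = quantiles_per_action[a]
        strictly_dominated = any(
            ssd_dominates(quantiles_per_action[b], qa)
            and not ssd_dominates(qa, quantiles_per_action[b])
            for b in range(n)
            if b != a
        )
        if not strictly_dominated:
            keep.append(a)
    return keep
```

With `safe = [1.0, 1.0, 1.0, 1.0]` and `risky = [-2.0, 0.0, 2.0, 4.0]`, both actions have the same mean return, so a purely expectation-based agent would be indifferent between them; `undominated_actions([safe, risky])` keeps only action 0, because the safe action's lower tail is never worse.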

Sonar-Based Detection and Tracking of Underwater Pipelines

At ICRA 2019's Underwater Robotics Perception Workshop, we recently presented our work on deep learning-enabled detection and tracking of underwater pipelines using multibeam imaging sonar, which is collaborative research with our colleagues at Schlumberger. In the above video, our BlueROV performs an automated flyover of a pipeline placed in Stevens' Davidson Laboratory towing tank. Our paper describing this work is available here.

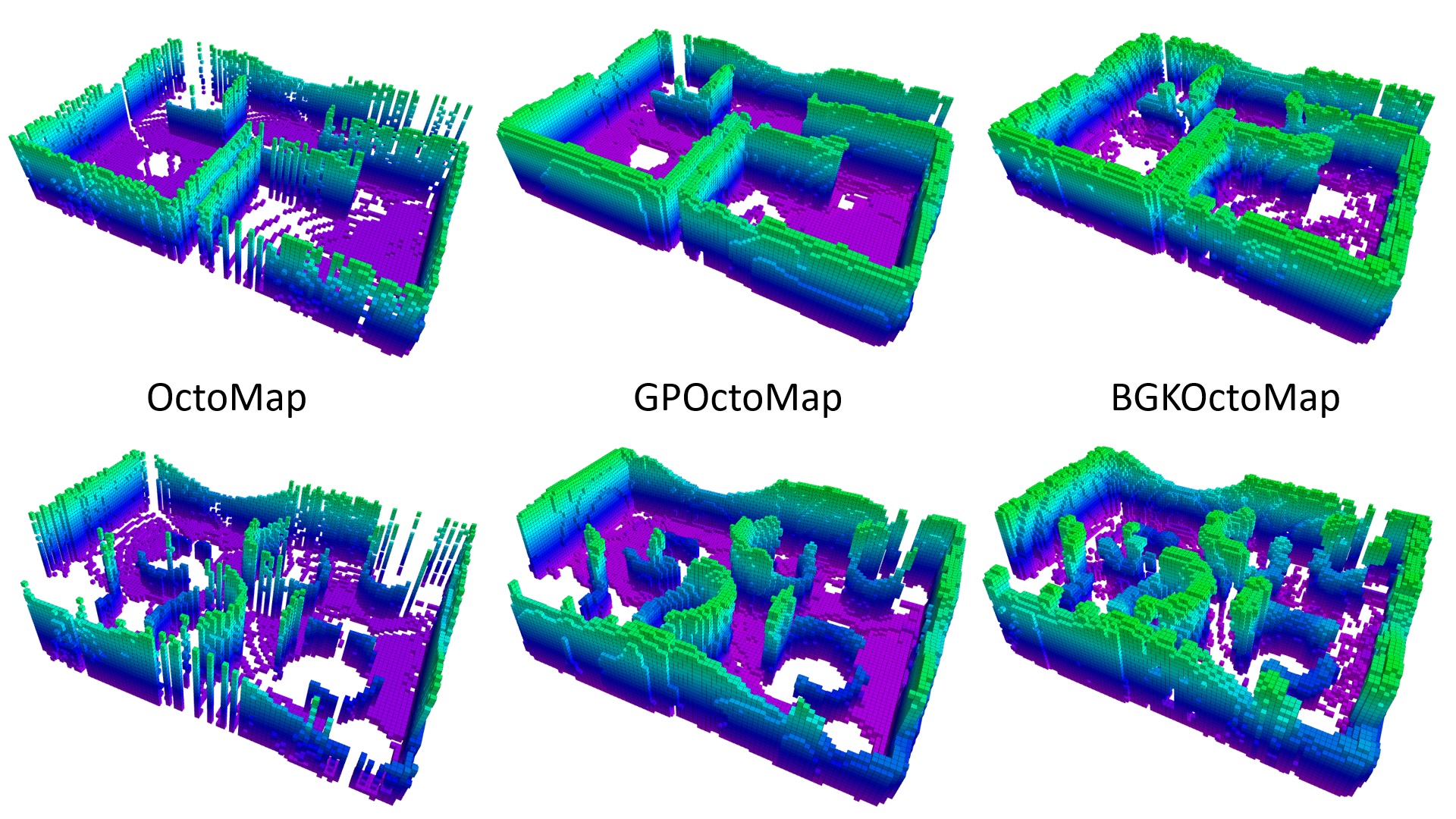

Learning-Aided Terrain Mapping Code Release

We have developed a terrain mapping algorithm that uses Bayesian generalized kernel (BGK) inference for accurate traversability mapping under sparse Lidar data. The BGK terrain mapping algorithm was presented at the 2nd Annual Conference on Robot Learning. We encourage you to download our library from GitHub. A specialized version for ROS-supported unmanned ground vehicles, which includes Lidar odometry and motion planning, is also available on GitHub. The author and maintainer of both libraries is Tixiao Shan.
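The core idea of BGK-style inference is that an unobserved map cell borrows information from nearby measurements through a kernel, so sparse lidar returns can fill in the gaps between scan lines. The sketch below is our own simplified illustration, not code from our library: it predicts elevation as a kernel-weighted posterior mean with a weak prior, using a compactly supported "sparse" kernel (a common choice for BGK inference) that is exactly zero beyond `length_scale`. The parameters `length_scale`, `prior_mean`, and `prior_weight` are hypothetical, and the predictive variance is omitted.

```python
import numpy as np


def sparse_kernel(d, length_scale=1.0, sigma0=1.0):
    """Compactly supported 'sparse' kernel: exactly zero for d >= length_scale."""
    r = np.asarray(d, dtype=float) / length_scale
    k = sigma0 * (
        (2.0 + np.cos(2.0 * np.pi * r)) / 3.0 * (1.0 - r)
        + np.sin(2.0 * np.pi * r) / (2.0 * np.pi)
    )
    return np.where(r < 1.0, k, 0.0)


def bgk_elevation(query_xy, train_xy, train_z, length_scale=1.0,
                  prior_mean=0.0, prior_weight=1e-3):
    """Kernel-weighted (BGK-style) posterior mean of elevation at query cells.

    Returns (mean, support): support is the summed kernel weight, which is
    zero when no observation lies within length_scale of a query cell.
    """
    q = np.atleast_2d(np.asarray(query_xy, dtype=float))
    x = np.atleast_2d(np.asarray(train_xy, dtype=float))
    z = np.asarray(train_z, dtype=float)
    d = np.linalg.norm(q[:, None, :] - x[None, :, :], axis=-1)  # shape (Q, N)
    k = sparse_kernel(d, length_scale)
    support = k.sum(axis=1)
    # Prior pseudo-observation plus kernel-weighted measurements.
    mean = (prior_weight * prior_mean + k @ z) / (prior_weight + support)
    return mean, support
```

A cell between two nearby returns inherits their elevation, while a cell far from any return falls back to the prior and reports zero support, which a traversability layer could treat as "unknown". The compact support also keeps each query local, since only measurements within `length_scale` contribute.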

Marine Robotics Research Profiled by NJTV News

NJTV News recently joined us for a laboratory experiment with our BlueROV underwater robot where we tested its ability to autonomously track an underwater pipeline using deep learning-enabled segmentation of its sonar imagery. The full article describing how this work may aid the inspection of New Jersey's infrastructure is available at NJTV News.

LeGO-LOAM: Lightweight, Ground-Optimized Lidar Odometry and Mapping

We have developed a new Lidar odometry and mapping algorithm intended for ground vehicles, which uses a small number of features and is suitable for computationally lightweight, embedded systems applications. Ground-based and above-ground features are used to solve different components of the six degree-of-freedom transformation between consecutive Lidar frames. The algorithm was presented earlier this year at the University of Minnesota's Roadway Safety Institute Seminar Series. We are excited that LeGO-LOAM will appear at IROS 2018! We encourage you to download our library from GitHub. The author and maintainer of this library is Tixiao Shan.
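In LeGO-LOAM, planar features on the ground constrain the vertical translation, roll, and pitch \([t_z, \theta_{roll}, \theta_{pitch}]\), and edge features above the ground then constrain the remaining \([t_x, t_y, \theta_{yaw}]\). The sketch below is a deliberately simplified, hypothetical illustration of the ground half only: instead of LeGO-LOAM's feature matching and optimization, it fits a plane to the ground points of each frame and reads off sensor height, roll, and pitch. It assumes flat ground shared by both frames, ZYX Euler angles, tilt below 90 degrees, and small motion (so differencing the angles is a reasonable approximation). The function names are ours.

```python
import numpy as np


def ground_to_z_roll_pitch(ground_pts):
    """Fit a plane to ground points in the sensor frame; return (height, roll, pitch).

    Assumes ZYX Euler angles (R = Rz(yaw) @ Ry(pitch) @ Rx(roll), sensor to world)
    and a tilt of less than 90 degrees, so the upward normal has a positive z.
    """
    pts = np.asarray(ground_pts, dtype=float)
    centroid = pts.mean(axis=0)
    # The plane normal is the right-singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(pts - centroid)
    up = vt[-1]
    if up[2] < 0:
        up = -up
    # `up` is the world z-axis expressed in the sensor frame:
    # (-sin(pitch), sin(roll)cos(pitch), cos(roll)cos(pitch)). Yaw does not appear.
    height = float(-up @ centroid)
    roll = float(np.arctan2(up[1], up[2]))
    pitch = float(np.arctan2(-up[0], np.hypot(up[1], up[2])))
    return height, roll, pitch


def ground_constrained_delta(prev_ground, curr_ground):
    """Small-motion approximation of [dz, droll, dpitch] between two frames."""
    h0, r0, p0 = ground_to_z_roll_pitch(prev_ground)
    h1, r1, p1 = ground_to_z_roll_pitch(curr_ground)
    # Over flat ground, a larger height above the plane means the sensor moved up.
    return np.array([h1 - h0, r1 - r0, p1 - p0])
```

Note that yaw never enters the ground-plane normal, which is exactly why the ground alone cannot determine \(\theta_{yaw}\) (or \(t_x\), \(t_y\)) and a second step using above-ground features is needed.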

3D Mapping Code Release - The Learning-Aided 3D Mapping Library (LA3DM)

Autonomous Navigation with Jackal UGV

We have developed terrain traversability mapping and autonomous navigation capability for our LIDAR-equipped Clearpath Jackal Unmanned Ground Vehicle (UGV). This work by Tixiao Shan was recently highlighted on the Clearpath Robotics Blog.